Logging in Hive

Under the hood, Hive uses the Log4j 2 framework for logging. To configure Hive logging, use the Custom log4j.properties settings in ADCM. For details on using these settings, see the Configure logging with ADCM section below.

Logs location and format

By default, Hive stores all logs in the local file system under the root log directory /var/log/hive.

The root log directory is customizable and is defined by the hive.log.dir Log4j property.

Hive logs are stored as plain-text files with the .log or .out extension.

Most log events have the following structure:

[Timestamp] [Severity] [Thread that generates the event] [Logger name]: [Event message]

For example:

2024-01-29T16:26:24,216 INFO [HiveServer2-Background-Pool: Thread-71] ql.Driver: Executing command(queryId=hive_20240129162624_f4dfb3c4-3e27-4113-bbad-d524e54fcc26): drop table if exists hive_main_through_beeline_check_75320
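To work with these fields programmatically, for example to filter events by severity or logger, a log line can be split with a regular expression. The following Python sketch assumes the default HiveServer2 layout pattern %d{ISO8601} %5p [%t] %c{2}: %m%n; the `parse_event` function and the `LogEvent` type are hypothetical names used only for illustration.

```python
import re
from typing import NamedTuple, Optional

# Hypothetical parser for the default layout "%d{ISO8601} %5p [%t] %c{2}: %m%n".
# %5p pads the level to five characters, so one or more spaces may precede it.
LOG_EVENT = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2},\d{3})\s+"
    r"(?P<level>[A-Z]+)\s+"
    r"\[(?P<thread>[^\]]*)\]\s+"
    r"(?P<logger>\S+): (?P<message>.*)$"
)


class LogEvent(NamedTuple):
    timestamp: str
    level: str
    thread: str
    logger: str
    message: str


def parse_event(line: str) -> Optional[LogEvent]:
    """Split one log line into fields; return None for lines that are not event headers
    (for example, stack-trace frames that continue a previous event)."""
    match = LOG_EVENT.match(line)
    return LogEvent(**match.groupdict()) if match else None


sample = ("2024-01-29T16:26:24,216 INFO [HiveServer2-Background-Pool: Thread-71] "
          "ql.Driver: Executing command(queryId=hive_20240129162624_f4dfb3c4-3e27-4113-bbad-d524e54fcc26): "
          "drop table if exists hive_main_through_beeline_check_75320")
event = parse_event(sample)
print(event.level, event.logger)  # INFO ql.Driver
```

The thread group stops at the first closing bracket, which is enough for the thread names shown in this article but would need adjusting for thread names that contain "]".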

Log types

Hive logs are generated by separate Hive components, such as HiveServer2, Metastore, and Beeline. Each component has its own customizable Log4j configuration that defines its logging behavior. The major log types generated by Hive components are described below.

HiveServer2 logs

By default, Hive writes HiveServer2 logs to files named hive-server2.log. These logs reflect HiveServer2 activity, including all errors and exceptions raised in the HiveServer2 core. For example:

2024-01-30T13:42:04,048 INFO [1bd2c26d-2da1-4bea-aa8d-53e24b21fa9e HiveServer2-Handler-Pool: Thread-60] ql.Context: New scratch dir is hdfs://adh/tmp/hive/hive/1bd2c26d-2da1-4bea-aa8d-53e24b21fa9e/hive_2024-01-30_13-42-02_298_4565019303774254377-2
2024-01-30T13:42:04,097 ERROR [1bd2c26d-2da1-4bea-aa8d-53e24b21fa9e HiveServer2-Handler-Pool: Thread-60] parse.CalcitePlanner: CBO failed, skipping CBO.
org.apache.hadoop.hive.ql.parse.SemanticException: Line 1:12 Cannot insert into target table because column number/types are different 'transactions': Table insclause-0 has 4 columns, but query has 1 columns.
    at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.genConversionSelectOperator(SemanticAnalyzer.java:8031) ~[hive-exec-3.1.3.jar:3.1.3]
    at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.genFileSinkPlan(SemanticAnalyzer.java:7576) ~[hive-exec-3.1.3.jar:3.1.3]
    at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.genPostGroupByBodyPlan(SemanticAnalyzer.java:10635) ~[hive-exec-3.1.3.jar:3.1.3]
    at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.genBodyPlan(SemanticAnalyzer.java:10507) ~[hive-exec-3.1.3.jar:3.1.3]
    at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.genPlan(SemanticAnalyzer.java:11420) ~[hive-exec-3.1.3.jar:3.1.3]
    ...

The hive-server2.out file stores the redirected HiveServer2 console output and is cleared each time HiveServer2 starts.

Metastore logs

The hive-metastore.log files store information related to Hive Metastore activity. For example:

2024-01-30T14:02:50,819 INFO [main] server.HiveServer2: Starting HiveServer2
2024-01-30T14:02:52,582 INFO [main] service.AbstractService: Service:OperationManager is inited.
2024-01-30T14:02:52,582 INFO [main] service.AbstractService: Service:SessionManager is inited.
2024-01-30T14:02:52,583 INFO [main] service.AbstractService: Service:CLIService is inited.
2024-01-30T14:02:52,583 INFO [main] service.AbstractService: Service:ThriftBinaryCLIService is inited.
...

Also, Metastore logs include audit information. For example:

$ grep 'audit' /var/log/hive/hive-metastore.log

The output:

2024-02-01T00:00:48,327 INFO [pool-6-thread-4] HiveMetaStore.audit: ugi=hive ip=10.92.41.182 cmd=source:10.92.41.182 get_config_value: name=metastore.batch.retrieve.max defaultValue=50 ...
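The grep command above matches every line that contains the word audit, including unrelated messages. A slightly stricter approach keys on the HiveMetaStore.audit logger and splits out the ugi, ip, and cmd fields. The following Python sketch assumes the field layout of the sample line above; `audit_records` is a hypothetical helper.

```python
import re
from typing import Dict, Iterable, Iterator

# Matches audit events like the sample above. The logger name "HiveMetaStore.audit"
# and the ugi/ip/cmd fields are taken from that sample line.
AUDIT_EVENT = re.compile(
    r"HiveMetaStore\.audit: ugi=(?P<ugi>\S+)\s+ip=(?P<ip>\S+)\s+cmd=(?P<cmd>.*)$"
)


def audit_records(lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Yield the ugi, ip, and cmd fields of every Metastore audit event in `lines`."""
    for line in lines:
        match = AUDIT_EVENT.search(line)
        if match:
            yield match.groupdict()
```

To use it on a Metastore host, pass an open file object, for example one opened on /var/log/hive/hive-metastore.log; each yielded dictionary holds the user, the client address, and the rest of the command text.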

Hive Beeline logs

The hive-beeline.log files store information about Beeline shell operations.

Configure logging with ADCM

Since Hive uses Log4j, configuring Hive logging means modifying key/value properties in *-log4j.properties files. These files reside at different locations on the hosts running the corresponding Hive components, and Hive reads them at startup. Instead of editing these files manually, you can use ADCM settings to update Log4j configurations on all ADH cluster hosts at once. To do this:

- In ADCM, go to Clusters → <your_cluster_name> → Services → Hive → Primary Configuration and enable the Show advanced option.

- In the Custom log4j.properties section, click the hive-log4j.properties item that corresponds to the Hive component you need and edit the Log4j properties. Log4j properties defined in the ADCM UI extend or overwrite the values in the *-log4j.properties files used by Hive. For details on supported Log4j properties, see the Log4j 2 documentation.

- Save the Hive service configuration.

- Restart the Hive service.
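Conceptually, the extend/overwrite behavior described in the steps above works like a key-by-key merge. The following Python sketch models it under an assumption: that custom keys replace default keys with the same name and new keys are simply added. It is not ADCM's actual implementation, and `parse_properties` and `apply_overrides` are hypothetical helpers.

```python
from typing import Dict


def parse_properties(text: str) -> Dict[str, str]:
    """Parse simple 'key = value' lines, skipping blank lines and '#' or '!' comments.

    This is a simplification: java.util.Properties also supports ':' separators,
    escapes, and line continuations, which are ignored here."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def apply_overrides(defaults: str, custom: str) -> Dict[str, str]:
    """Hypothetical model of the merge: custom keys are added if new (extend)
    or replace the default value if the key already exists (overwrite)."""
    merged = parse_properties(defaults)
    merged.update(parse_properties(custom))
    return merged


defaults = "property.hive.log.level = INFO\nproperty.hive.log.dir = /var/log/hive\n"
custom = "property.hive.log.level = DEBUG\nappender.DRFA.policies.size.size = 10MB\n"
print(apply_overrides(defaults, custom))
# {'property.hive.log.level': 'DEBUG', 'property.hive.log.dir': '/var/log/hive', 'appender.DRFA.policies.size.size': '10MB'}
```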


NOTE

In this article, "update a Log4j property" means changing the property through the ADCM UI as described above, including the Hive restart.


Below is the default hive-log4j.properties file used by the HiveServer2 component. The major properties are marked with numbered callouts, explained after the listing, to help you get started with Log4j configuration. For a detailed reference on supported Log4j properties, see the Log4j 2 documentation.

status = INFO
name = HiveLog4j2
packages = org.apache.hadoop.hive.ql.log

# list of properties
property.hive.log.level = INFO (1)
property.hive.root.logger = DRFA (2)
property.hive.log.dir = /var/log/hive (3)
property.hive.log.file = hive-server2.log (4)
property.hive.perflogger.log.level = INFO

# list of all appenders
appenders = console, DRFA (5)

# console appender
appender.console.type = Console
appender.console.name = console
appender.console.target = SYSTEM_ERR
appender.console.layout.type = PatternLayout
appender.console.layout.pattern = %d{ISO8601} %5p [%t] %c{2}: %m%n

# daily rolling file appender
appender.DRFA.type = RollingRandomAccessFile (6)
appender.DRFA.name = DRFA
appender.DRFA.fileName = ${sys:hive.log.dir}/${sys:hive.log.file}
# Use %pid in the filePattern to append <process-id>@<host-name> to the filename if you want separate log files for different CLI session
appender.DRFA.filePattern = ${sys:hive.log.dir}/${sys:hive.log.file}.%d{yyyy-MM-dd} (7)
appender.DRFA.layout.type = PatternLayout
appender.DRFA.layout.pattern = %d{ISO8601} %5p [%t] %c{2}: %m%n (8)
appender.DRFA.policies.type = Policies
appender.DRFA.policies.time.type = TimeBasedTriggeringPolicy (9)
appender.DRFA.policies.time.interval = 1 (10)
appender.DRFA.policies.time.modulate = true

# list of all loggers
loggers = NIOServerCnxn, ClientCnxnSocketNIO, DataNucleus, Datastore, JPOX, PerfLogger, AmazonAws, ApacheHttp (11)

logger.NIOServerCnxn.name = org.apache.zookeeper.server.NIOServerCnxn
logger.NIOServerCnxn.level = WARN

logger.ClientCnxnSocketNIO.name = org.apache.zookeeper.ClientCnxnSocketNIO
logger.ClientCnxnSocketNIO.level = WARN

logger.DataNucleus.name = DataNucleus
logger.DataNucleus.level = ERROR

logger.Datastore.name = Datastore
logger.Datastore.level = ERROR

logger.JPOX.name = JPOX
logger.JPOX.level = ERROR

logger.AmazonAws.name=com.amazonaws
logger.AmazonAws.level = INFO

logger.ApacheHttp.name=org.apache.http
logger.ApacheHttp.level = INFO

logger.PerfLogger.name = org.apache.hadoop.hive.ql.log.PerfLogger
logger.PerfLogger.level = ${sys:hive.perflogger.log.level}

# root logger
rootLogger.level = ${sys:hive.log.level} (12)
rootLogger.appenderRefs = root
rootLogger.appenderRef.root.ref = ${sys:hive.root.logger}

(1) Sets the root log level. The possible severity levels are: ALL, TRACE, DEBUG, INFO, WARN, ERROR, FATAL, and OFF. Events with a lower severity than the configured level are not included in logs. For example, DEBUG produces more verbose logs, whereas ERROR makes Hive log only errors and more critical events.

(2) Specifies the appender used by the root logger. The DRFA (daily rolling file) appender writes events to a file that is rotated periodically.

(3) Specifies the root directory for log files. All log files generated by this Log4j configuration are stored under this directory.

(4) Specifies the name of the log file.

(5) Lists the Log4j appenders, which deliver log events to destinations such as a file or a JMS queue. In this configuration, the two default appenders route events to the console and to a file.

(6) Uses the RollingRandomAccessFile appender to write log events to a file that is rotated periodically.

(7) Specifies the pattern used to rename the log file when it is rotated.

(8) Defines the pattern that converts a log event to a string.

(9) Uses TimeBasedTriggeringPolicy, which rotates the log file based on the date pattern in filePattern.

(10) Sets the rotation interval. The interval is counted in the most specific time unit of the filePattern date pattern; with %d{yyyy-MM-dd}, the log file is rotated every day.

(11) Lists all configured loggers. Each logger generates log events related to certain functionality.

(12) Defines the root logger properties. In Log4j, loggers are hierarchical, so non-root loggers inherit the root logger's properties unless they override them explicitly.
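To see how callouts (3), (4), and (7) fit together, the following Python sketch expands a filePattern into the name of a rotated file. It assumes that the ${sys:...} lookups resolve to the values set by property.hive.log.dir and property.hive.log.file, and it handles only the pattern elements used in this article; `expand_file_pattern` is a hypothetical helper, not part of Log4j.

```python
import re
from datetime import date
from typing import Dict


def expand_file_pattern(pattern: str, props: Dict[str, str], day: date, index: int = 1) -> str:
    """Expand a simplified Log4j 2 filePattern into the name of one rotated file.

    Only ${sys:NAME} lookups (resolved from `props`), the %d{yyyy-MM-dd} date
    pattern, and the %i counter are handled; Log4j 2 itself supports far more."""
    name = re.sub(r"\$\{sys:([^}]+)\}", lambda m: props[m.group(1)], pattern)
    name = name.replace("%d{yyyy-MM-dd}", day.strftime("%Y-%m-%d"))
    return name.replace("%i", str(index))


props = {"hive.log.dir": "/var/log/hive", "hive.log.file": "hive-server2.log"}
print(expand_file_pattern("${sys:hive.log.dir}/${sys:hive.log.file}.%d{yyyy-MM-dd}", props, date(2024, 1, 29)))
# /var/log/hive/hive-server2.log.2024-01-29
```

With the default pattern, the file holding the events of 29 January 2024 would be renamed to hive-server2.log.2024-01-29, while hive-server2.log keeps receiving new events.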

Common tasks

Below are common tasks that may arise while configuring Hive logging with Log4j.

To change the logging level (defaults to INFO), use the property.hive.log.level property.

You can set a root log level, which is inherited by all non-root Log4j loggers that do not set their own level, or you can set log levels for individual loggers, for example, logger.PerfLogger.level = <OTHER_LEVEL>.
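The level inheritance described above can be modeled in a few lines. The following Python sketch is a simplified illustration, not Log4j code: an event passes if its severity is at least the effective level of its logger, and the effective level comes from the closest configured ancestor in the dotted logger name, falling back to the root level. The logger names come from the default hive-log4j.properties file; the levels assigned to them in the example are hypothetical.

```python
from typing import Dict

# Log4j 2 severity order from least to most severe; ALL and OFF are used only as thresholds.
LEVELS = ["ALL", "TRACE", "DEBUG", "INFO", "WARN", "ERROR", "FATAL", "OFF"]
RANK = {name: rank for rank, name in enumerate(LEVELS)}


def effective_level(logger: str, configured: Dict[str, str], root_level: str) -> str:
    """Return the level of the closest configured ancestor by dotted name, else the root level."""
    name = logger
    while name:
        if name in configured:
            return configured[name]
        name = name.rpartition(".")[0]  # "a.b.c" -> "a.b" -> "a" -> ""
    return root_level


def is_logged(event_level: str, logger: str, configured: Dict[str, str], root_level: str) -> bool:
    """An event is written only if it is at least as severe as the logger's effective level."""
    return RANK[event_level] >= RANK[effective_level(logger, configured, root_level)]


# Hypothetical setup: root level INFO, PerfLogger lowered to DEBUG, DataNucleus raised to ERROR.
configured = {"org.apache.hadoop.hive.ql.log.PerfLogger": "DEBUG", "DataNucleus": "ERROR"}
print(is_logged("DEBUG", "org.apache.hadoop.hive.ql.log.PerfLogger", configured, "INFO"))  # True
print(is_logged("DEBUG", "org.apache.hadoop.hive.ql.Driver", configured, "INFO"))  # False
print(is_logged("WARN", "DataNucleus.Datastore", configured, "INFO"))  # False
```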

By default, Hive logs are stored under the /var/log/hive/ directory.

To change this directory, modify the property.hive.log.dir property.

In Log4j, each logger entity is responsible for generating log events related to certain functionality.

To exclude specific events from logs (for example, PerfLogger events), remove the corresponding logger from the loggers = … definition list, or set a higher severity level for that logger (OFF disables its events entirely).

You can include Hive query and session IDs in HiveServer2 logs.

For this, modify the appender.DRFA.layout.pattern property in the HiveServer2 hive-log4j.properties configuration as shown in the example below.

appender.DRFA.layout.pattern = %d{ISO8601} %5p [%t] %c{2}: QueryID: %X{queryId}; SessionID: %X{sessionId} %m%n

After this, Hive query/session IDs will be available in log events, for example:

2024-02-01T17:17:01,175 INFO [5818afd9-10b9-4d59-9efc-33fb4b5d2c2c HiveServer2-Handler-Pool: Thread-49] exec.SelectOperator: QueryID: hive_20240201171700_bdc1be52-6070-41f9-bb5d-d9b2c33dd24b; SessionID: 5818afd9-10b9-4d59-9efc-33fb4b5d2c2c SELECT struct<txn_id:int,acc_id:int,txn_amount:decimal(10,2),txn_date:date>
2024-02-01T17:17:01,175 INFO [5818afd9-10b9-4d59-9efc-33fb4b5d2c2c HiveServer2-Handler-Pool: Thread-49] exec.ListSinkOperator: QueryID: hive_20240201171700_bdc1be52-6070-41f9-bb5d-d9b2c33dd24b; SessionID: 5818afd9-10b9-4d59-9efc-33fb4b5d2c2c Initializing operator LIST_SINK[3]
...
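Once query IDs are part of each line, the events of a single query can be pulled out of a log file. The following Python sketch assumes the exact "QueryID: ...; SessionID: ..." layout configured above and groups lines by query ID; `events_by_query` is a hypothetical helper.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List

# Matches the "QueryID: %X{queryId}; SessionID: %X{sessionId}" part of the pattern above.
# %X prints nothing when the key is absent, so both IDs may be empty.
IDS = re.compile(r"QueryID: (?P<query>[^;\s]*); SessionID: (?P<session>\S*)")


def events_by_query(lines: Iterable[str]) -> Dict[str, List[str]]:
    """Group log lines by query ID, skipping lines logged outside any query."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for line in lines:
        match = IDS.search(line)
        if match and match.group("query"):
            groups[match.group("query")].append(line.rstrip("\n"))
    return dict(groups)
```

Lines written outside a query context carry an empty QueryID and are skipped, so the result contains only query-scoped events.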

By default, Hive logs get rotated on a daily basis.

To change the rotation policy and make Hive additionally rotate logs based on size, use the SizeBasedTriggeringPolicy.

The following properties instruct Log4j to rotate the log file upon reaching a specific size threshold (10 MB).

appender.DRFA.policies.size.type = SizeBasedTriggeringPolicy

appender.DRFA.policies.size.size = 10MB

# '%i' is mandatory if SizeBasedTriggeringPolicy is used with TimeBasedTriggeringPolicy:

appender.DRFA.filePattern = ${sys:hive.log.dir}/${sys:hive.log.file}.%d{yyyy-MM-dd}-%i
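The reason %i is required can be seen by simulating both triggers together. The following Python sketch is a simplified model, not Log4j's rollover code: before each write, it rotates the active file if the day has changed or if the file already exceeds the size limit, and it numbers rotations within a day with %i. The tiny 10-byte limit and the `simulate_rollover` helper are hypothetical, chosen only to keep the example short.

```python
from datetime import date
from typing import List, Optional, Tuple


def simulate_rollover(writes: List[Tuple[date, int]], max_bytes: int, base: str) -> List[str]:
    """Return rotated file names for a sequence of (day, event_size) writes.

    Simplified model of TimeBasedTriggeringPolicy + SizeBasedTriggeringPolicy with
    filePattern "<base>.%d{yyyy-MM-dd}-%i": before each write, the active file is
    rotated if the day has changed or if it already exceeds max_bytes. %i grows within
    a day and restarts at 1 on a new day. Limits on how many files are kept are ignored."""
    rotated: List[str] = []
    current_day: Optional[date] = None
    size, index = 0, 1
    for day, nbytes in writes:
        if current_day is not None and day != current_day:
            rotated.append(f"{base}.{current_day:%Y-%m-%d}-{index}")  # time-based trigger
            size, index = 0, 1
        elif size > max_bytes:
            rotated.append(f"{base}.{current_day:%Y-%m-%d}-{index}")  # size-based trigger
            size, index = 0, index + 1
        current_day = day
        size += nbytes
    return rotated


writes = [(date(2024, 1, 29), 6), (date(2024, 1, 29), 6), (date(2024, 1, 29), 6), (date(2024, 1, 30), 1)]
print(simulate_rollover(writes, max_bytes=10, base="hive-server2.log"))
# ['hive-server2.log.2024-01-29-1', 'hive-server2.log.2024-01-29-2']
```

Both files in the output were rotated on 29 January. Without %i, they would get the same name, hive-server2.log.2024-01-29, and the second rotation would collide with the first.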

When a log file gets rotated, you might want to compress the "old" log file.

For this, specify the desired compression format within the filePattern property as shown in the following example.

If filePattern ends with ".gz", ".zip", ".bz2", ".deflate", ".pack200", ".zst", or ".xz", the resulting archive will be compressed using the compression scheme that matches the suffix.

# '.gz' ensures that the files will be compressed using gzip

appender.DRFA.filePattern = ${sys:hive.log.dir}/${sys:hive.log.file}.%d{yyyy-MM-dd}-%i.log.gz

View Hive logs in web UI

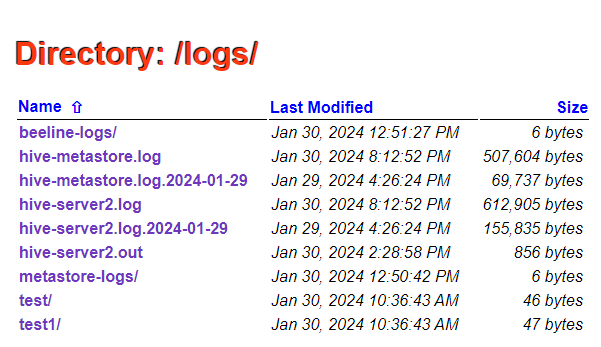

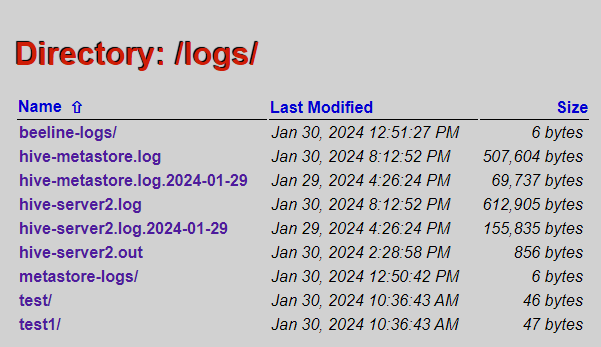

The HiveServer2 web UI lets you view plain-text logs on the Local logs page.

This page lists the contents of the root log directory (defined by hive.log.dir), where you can navigate between log files and view them as plain text.
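Outside the web UI, the same directory can be inspected directly on a HiveServer2 host. The following Python sketch lists the files under the root log directory (assumed to be the default /var/log/hive) and reads any of them as text. Because rotated files may be compressed through the filePattern suffix, it picks a decompressor by suffix; only .gz, .bz2, and .xz are covered, and `list_log_files` and `read_log_lines` are hypothetical helpers.

```python
import bz2
import gzip
import lzma
from pathlib import Path
from typing import Iterator, List

# Standard-library decompressors for three of the suffixes Log4j can produce.
# .zip, .deflate, .pack200, and .zst archives are not handled by this sketch.
OPENERS = {".gz": gzip.open, ".bz2": bz2.open, ".xz": lzma.open}


def list_log_files(log_dir: str = "/var/log/hive") -> List[str]:
    """Return the names of regular files in the root log directory, sorted by name."""
    return sorted(p.name for p in Path(log_dir).iterdir() if p.is_file())


def read_log_lines(path: str) -> Iterator[str]:
    """Yield text lines from an active or rotated log file, decompressing by suffix."""
    opener = OPENERS.get(Path(path).suffix, open)
    with opener(path, "rt", encoding="utf-8", errors="replace") as f:
        yield from f
```

Undecodable bytes are replaced rather than raising an error, which keeps the reader usable on logs that mix encodings.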