Replication factor

The HDFS replication factor is a parameter that defines how many copies of a file HDFS stores. When a file is created, it is divided into blocks, fixed-size pieces of data that make up the file (the last block can be smaller). Each block of the file is then stored as many times as the assigned replication factor specifies. The default HDFS replication factor is 3.
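As a worked example, the POSIX shell sketch below computes how many blocks a file is split into and how much raw DataNode storage its replicas take. hdfs_footprint is a hypothetical helper, not part of Hadoop. The example uses 128 MB, the HDFS default block size, and assumes that a final partial block stores only the remaining bytes rather than a full block.

```sh
# Hypothetical helper: prints how many blocks a file occupies and how much
# raw DataNode storage its replicas consume. All sizes are in bytes.
# Usage: hdfs_footprint <file_bytes> <block_bytes> <replication>
hdfs_footprint() {
  local file_bytes block_bytes replication blocks
  file_bytes=$1 block_bytes=$2 replication=$3
  # Ceiling division: a final partial block still counts as one block
  blocks=$(( (file_bytes + block_bytes - 1) / block_bytes ))
  # The last block is not padded, so raw usage is file size times replication
  echo "blocks=$blocks replicas=$(( blocks * replication )) raw_bytes=$(( file_bytes * replication ))"
}

MB=$(( 1024 * 1024 ))
# A 300 MB file, 128 MB blocks, replication factor 3:
# prints blocks=3 replicas=9 raw_bytes=943718400
hdfs_footprint $(( 300 * MB )) $(( 128 * MB )) 3
```

The 300 MB file becomes two full 128 MB blocks plus one 44 MB block, and each of the three blocks is stored three times.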

For more information about replication, see the HDFS architecture article.

Change the replication factor

You can change the HDFS replication factor for the whole system, for a single file, or for a directory.

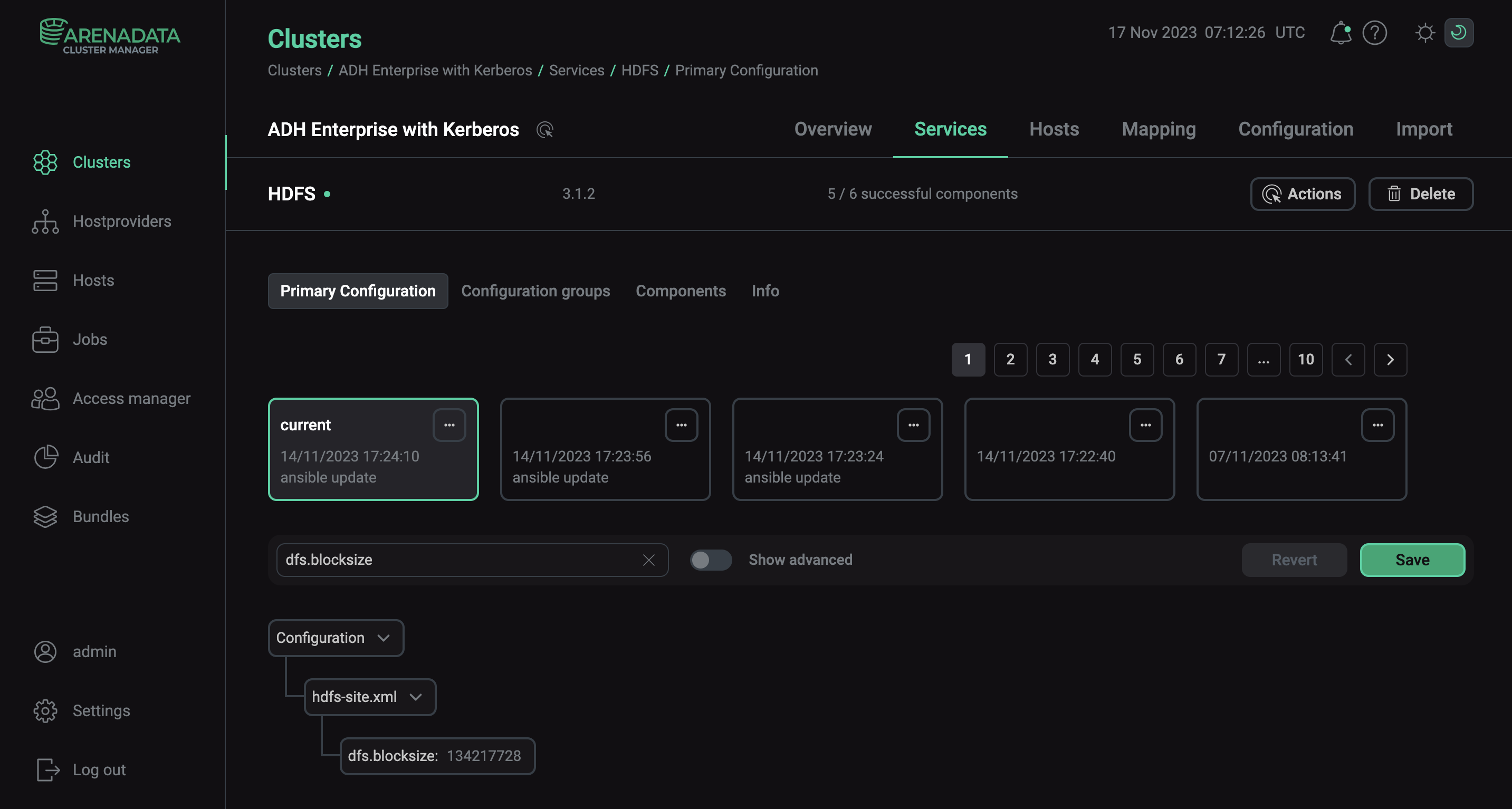

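To change the default replication factor, update the value of the dfs.replication parameter in the hdfs-site.xml configuration file. The new default applies only to files created after the change; to change the replication factor of existing files, use the setrep command described below. To update the parameter using ADCM: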

- On the Clusters page, select the desired cluster.
- Go to the Services tab and click HDFS.
- Find the dfs.replication field, enter the new default replication factor, and click Apply. Confirm the changes to the HDFS configuration by clicking Save.
- In the Actions drop-down menu, select Restart, make sure the Apply configs from ADCM option is set to true, and click Run.
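After the restart, you can compare the new default with the replication factor of a file written before the change. The sketch below assumes that the hdfs client on the host reads the configuration that ADCM distributed. check_replication is a hypothetical helper name; hdfs getconf -confKey and the %r format of hdfs dfs -stat are standard HDFS commands.

```sh
# Hypothetical helper: compares the configured default replication factor
# with the replication factor recorded for an existing HDFS file.
# Usage: check_replication <path>
check_replication() {
  # Default that clients apply to newly created files
  echo "default: $(hdfs getconf -confKey dfs.replication)"
  # %r prints the replication factor stored for the file
  echo "$1: $(hdfs dfs -stat %r "$1")"
}
```

For a file written before the change, the two numbers can differ.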

To set the replication factor for a file or a directory, use the setrep command:

    $ hadoop fs -setrep <numReplicas> <path>

Where:

- <numReplicas>: the replication factor to set for the file or for the files in the directory.
- <path>: the path to a file or a directory. If you specify a directory, the command changes the replication factor of all files under it, including files in subdirectories.
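The setrep call can be wrapped with a basic sanity check. In the sketch below, safe_setrep is a hypothetical helper, and the number of live DataNodes is passed in by the caller rather than discovered automatically. It uses the -w option of setrep, which makes the command wait until the blocks reach the new replication factor; on large directories this can take a long time.

```sh
# Hypothetical helper (not part of Hadoop): checks the requested replication
# factor before calling setrep.
# Usage: safe_setrep <numReplicas> <path> <liveDataNodes>
safe_setrep() {
  local replicas path datanodes
  replicas=$1 path=$2 datanodes=$3
  # Accept only positive integers without leading zeros
  case $replicas in
    ''|0*|*[!0-9]*) echo "invalid replication factor: $replicas" >&2; return 1 ;;
  esac
  case $datanodes in
    ''|*[!0-9]*) echo "invalid DataNode count: $datanodes" >&2; return 1 ;;
  esac
  # HDFS does not place two replicas of one block on the same DataNode,
  # so a higher factor would leave the blocks under-replicated
  if [ "$replicas" -gt "$datanodes" ]; then
    echo "replication factor $replicas exceeds $datanodes DataNodes" >&2
    return 1
  fi
  # -w waits until all blocks under the path reach the new replication factor
  hadoop fs -setrep -w "$replicas" "$path"
}
```

For example, safe_setrep 2 /data/logs 3 runs setrep on the hypothetical /data/logs path, while safe_setrep 4 /data/logs 3 stops with an error before contacting HDFS.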

NOTE

Changing the replication factor for a directory affects only the files that exist in it when you run the command. New files added to the directory later are created with the default replication factor.
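To see which replication factor each file under a directory actually has, for example after running setrep on the directory, you can read the second column of hdfs dfs -ls -R, which holds the replication factor for files and "-" for directories. list_replication is a hypothetical helper, and the sketch assumes the default ls column layout and paths without spaces.

```sh
# Hypothetical helper: prints "<replication> <path>" for every file under a
# directory, based on the output of hdfs dfs -ls -R.
# Usage: list_replication <directory>
list_replication() {
  # ls columns: permissions, replication, owner, group, size, date, time, path.
  # Directory lines start with "d" and show "-" instead of a replication factor.
  hdfs dfs -ls -R "$1" | awk '$1 !~ /^d/ && NF >= 8 { print $2, $8 }'
}
```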

Increased replication

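When the replication factor of existing files is increased, for example with setrep, the NameNode marks their blocks as under-replicated and initiates replication. Make sure that the replication factor is not higher than the number of DataNodes in the cluster: HDFS does not store two replicas of the same block on one DataNode, so the extra replicas cannot be placed and the blocks remain under-replicated.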
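While the NameNode creates the missing replicas, you can follow its progress with hdfs fsck, whose summary reports the number of under-replicated blocks. The sketch below extracts that number. under_replicated is a hypothetical helper, and the summary layout it parses differs somewhat between Hadoop versions, so verify it against the fsck output on your cluster.

```sh
# Hypothetical helper: prints the number of under-replicated blocks under
# a path, as reported in the hdfs fsck summary.
# Usage: under_replicated [path]   (defaults to /)
under_replicated() {
  # The summary contains a line such as "Under-replicated blocks:  12 (0.5 %)"
  hdfs fsck "${1:-/}" 2>/dev/null |
    sed -n 's/.*Under-replicated blocks:[[:space:]]*\([0-9][0-9]*\).*/\1/p' |
    head -n 1
}
```

For example, you can run it periodically after increasing the replication factor until it prints 0. On a large namespace, checking a narrower path puts less load on the NameNode than checking /.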

Decreased replication

When the replication factor is reduced, the NameNode instructs the DataNodes to delete the excess replicas. It might take some time for the DataNodes to delete them and report the newly available disk space.
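Once the DataNodes finish deleting the excess replicas, the raw space released equals the logical data size multiplied by the number of replicas removed. The sketch below estimates that amount. space_freed is a hypothetical helper; the logical size could come, for example, from the first number printed by hdfs dfs -du -s.

```sh
# Hypothetical helper: estimates the raw storage released when data of the
# given logical size goes from <old_factor> to <new_factor> replicas.
# Usage: space_freed <logical_bytes> <old_factor> <new_factor>
space_freed() {
  local bytes old new
  bytes=$1 old=$2 new=$3
  # Keeping or raising the factor releases nothing
  if [ "$new" -ge "$old" ]; then
    echo 0
    return 0
  fi
  echo $(( bytes * (old - new) ))
}

GB=$(( 1024 * 1024 * 1024 ))
# 100 GB of data going from 3 replicas to 2: prints 107374182400 (100 GB)
space_freed $(( 100 * GB )) 3 2
```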

You can set the replication factor to 1. This reduces HDFS fault tolerance, because each block is then stored on a single DataNode and becomes unavailable if that DataNode fails, but it can be necessary, for example, on single-node clusters. If you want to set the replication factor to 1 to save resources, consider using storage policies or erasure coding instead.
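The resource argument can be made concrete by comparing storage overhead. With replication factor r, every byte is stored r times; with a Reed-Solomon erasure coding policy that uses d data units and p parity units, such as the RS-6-3-1024k policy in Hadoop 3, the extra storage is p/d of the data. overhead_pct is a hypothetical helper, integer division rounds the result down, and /data/archive in the final comment is a hypothetical path.

```sh
# Hypothetical helper: extra raw storage as a percentage of the logical size.
# Usage: overhead_pct replication <factor>
#        overhead_pct ec <data_units> <parity_units>
overhead_pct() {
  case $1 in
    replication) echo $(( ($2 - 1) * 100 )) ;;
    ec)          echo $(( $3 * 100 / $2 )) ;;
    *)           echo "unknown scheme: $1" >&2; return 1 ;;
  esac
}

overhead_pct replication 3   # prints 200
overhead_pct replication 1   # prints 0, with no redundancy at all
overhead_pct ec 6 3          # prints 50; any 3 of the 9 units can be lost
# In Hadoop 3, such a policy is assigned to a directory with, for example:
#   hdfs ec -setPolicy -path /data/archive -policy RS-6-3-1024k
```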

Set the block size

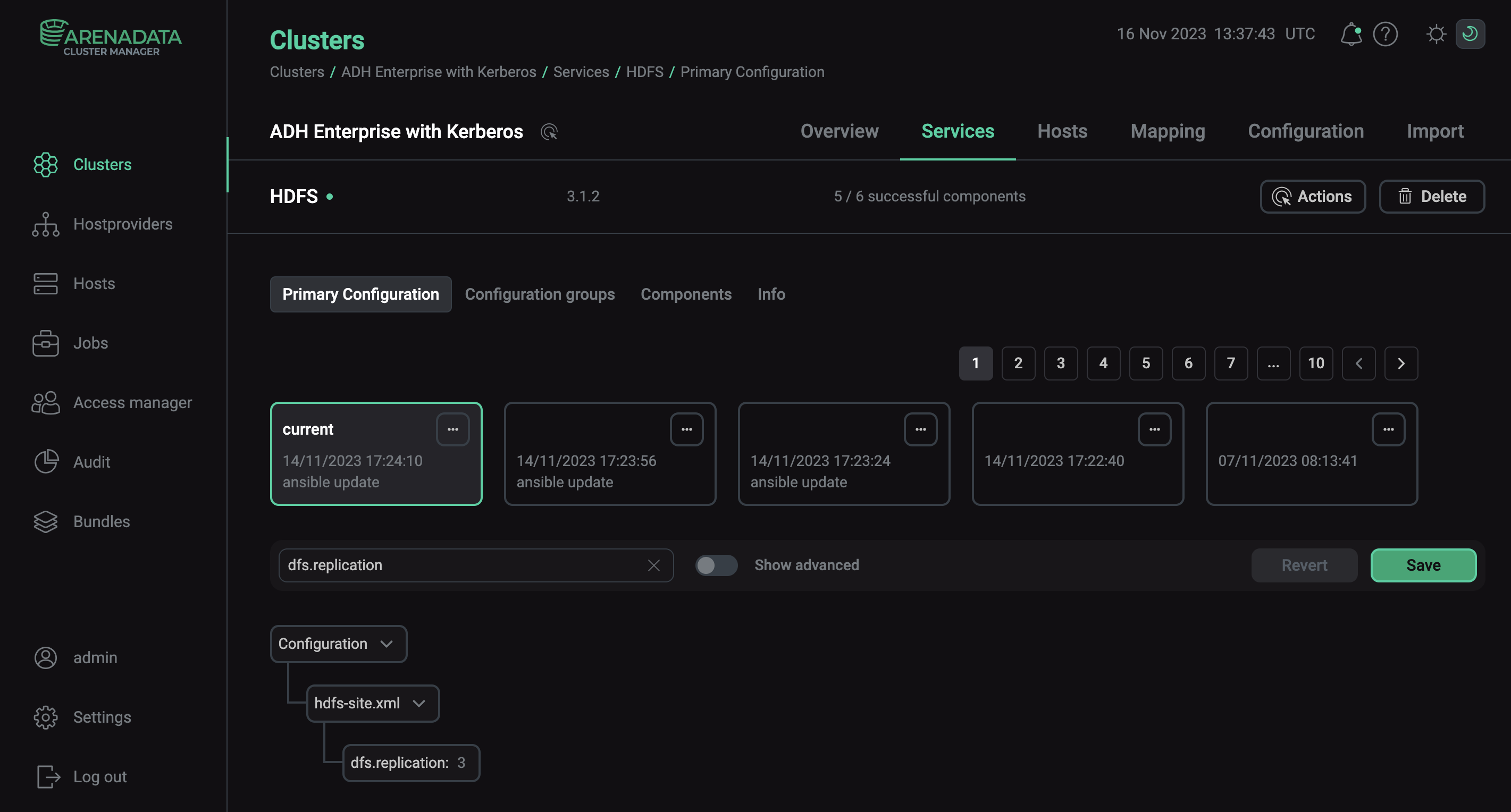

The default block size is 128 MB. To change it, update the dfs.blocksize parameter in the hdfs-site.xml configuration file. To do this using ADCM:

- On the Clusters page, select the desired cluster.
- Go to the Services tab and click HDFS.
- Find the dfs.blocksize field, enter the new block size in bytes, and click Apply. You can also use size suffixes, such as k for kilobytes, m for megabytes, and g for gigabytes.
- Confirm the changes to the HDFS configuration by clicking Save.
- In the Actions drop-down menu, select Restart, make sure the Apply configs from ADCM option is set to true, and click Run.
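Size suffixes in dfs.blocksize are interpreted as binary multiples, so 128m means 128 × 1024 × 1024 = 134217728 bytes, the 128 MB default. The sketch below performs the same conversion, which can help when comparing a suffixed value with a byte count reported elsewhere. to_bytes is a hypothetical helper; besides k, m, and g it accepts t, which Hadoop also recognizes.

```sh
# Hypothetical helper: converts a size such as 128m or 1G to bytes, treating
# the suffixes as binary multiples (k = 1024, m = 1024 * 1024, and so on).
# Usage: to_bytes <size>
to_bytes() {
  local value number suffix
  value=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  number=${value%[kmgt]}        # strip one trailing suffix letter, if present
  suffix=${value#"$number"}
  case $number in
    ''|*[!0-9]*) echo "invalid size: $1" >&2; return 1 ;;
  esac
  # Drop leading zeros so that shell arithmetic does not read the number as octal
  number=$(printf '%s' "$number" | sed 's/^0*//')
  number=${number:-0}
  case $suffix in
    '') echo "$number" ;;
    k)  echo $(( number << 10 )) ;;
    m)  echo $(( number << 20 )) ;;
    g)  echo $(( number << 30 )) ;;
    t)  echo $(( number << 40 )) ;;
  esac
}

to_bytes 128m   # prints 134217728, the default block size in bytes
```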

The new block size applies to files added to HDFS after the change. Existing files keep the block size they were written with.
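Because the block size is applied when a file is written, you can also override it for a single upload without changing the cluster default by passing the property as a generic -D option. put_with_blocksize is a hypothetical helper; -D dfs.blocksize and the %o (block size) format of hdfs dfs -stat are standard Hadoop options, but check them against your Hadoop version.

```sh
# Hypothetical helper: uploads one local file with its own block size and
# prints the block size that HDFS recorded for it.
# Usage: put_with_blocksize <block_bytes> <local_file> <hdfs_file_path>
put_with_blocksize() {
  local block_bytes src dst
  block_bytes=$1 src=$2 dst=$3
  # -D overrides dfs.blocksize for this command only
  hadoop fs -D dfs.blocksize="$block_bytes" -put "$src" "$dst" || return 1
  # %o prints the file's block size in bytes
  hdfs dfs -stat %o "$dst"
}
```

Pass the full destination file path: if the destination is an existing directory, -put places the file inside it and -stat reports on the directory instead.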